“One of the most salient features of our culture is that there is so much bullshit,” wrote Harry Frankfurt in his seminal 1986 essay On Bullshit. He bemoaned the rise of the “bullshitter”: somebody who is not so much interested in promoting falsehoods as uninterested in the truth altogether.

Unlike the liar, who intends to propagate a false idea, the bullshitter is indifferent to whether their bullshit is true or false. The bullshitter has “no conviction about what the truth might be” and “pays no attention” to what the truth could be. Instead, their bullshit serves as a distraction from the whole enquiry of truth-seeking itself.

Frankfurt was a philosopher who was concerned with the definition of the bullshitter as an archetype and bullshit as a mode of speech. But I think this misses the power of bullshit. Its prevalence in our culture has less to do with its status as an epistemological alternative to truth. It is instead an economic phenomenon with an awesome – perhaps lethal – comparative advantage when it comes to shaping general impressions, evoking emotions and building a brand.

This is all too well understood by perhaps one of the greatest bullshit artists of our age, Steve Bannon. “The real opposition is the media,” Bannon said in a 2017 interview, “and the way to deal with them is to flood the zone with shit.”

When messages or policies are launched at breakneck speed, the media is soon overwhelmed and can focus on only a narrow sliver of the onslaught. The second Trump administration has, indeed, been such a flood that even Republican lawmakers are complaining of “overwhelming sensory overload.”

This strategy takes advantage of the “bullshit asymmetry principle”, coined by the software engineer Alberto Brandolini in 2013. It states that “the amount of energy needed to refute bullshit is an order of magnitude bigger than to produce it.”

In the 12 years since, the cost of bullshit has dropped exponentially. The rise of Large Language Models (LLMs) – marketed as “artificial intelligence” – has created a sort of bullshit singularity: infinite bullshit at zero cost.

ChatGPT, Claude, Grok and other “AI” chatbots are able to spew endless reams of text, images and video that are packed with so-called “hallucinations”. These outputs look true but aren’t, yet the chatbot will insist they are.

This may be an endemic problem of LLMs, as larger and more advanced models are not more truthful. As three ethics scholars summarise, “ChatGPT is a bullshit machine”: “describing AI misrepresentations as bullshit is,” they say, an “accurate way of predicting and discussing the behaviour of these systems”.

The question of whether LLMs can ever stop being bullshitters may be immaterial. It may well be that, thanks to Brandolini’s law, bullshit is strategically desirable. “Spam” content is pure, unadulterated bullshit, and has long been enormously profitable. It is now cheaper than ever to create at scale.

This is why Google Search, once a wonder of the internet world, has become increasingly useless. ChatGPT and other LLMs are being used to produce endless reams of spam that are optimised for SEO. Wired reports how these tools are being used to plagiarise entire publications by altering a few phrases, adding AI images and even translating them into foreign languages. Often these bullshit articles are ranked higher than the originals.

Google has been fighting back by changing its ranking algorithms, but it is far from winning the war. Search for health advice or recipes or DIY tips or childcare problems and the results are full of oddly written articles with strange repetitious text, uncanny images and dubious advice. AI-generated slop is filling up the internet, and even Google cannot hold back the tide.

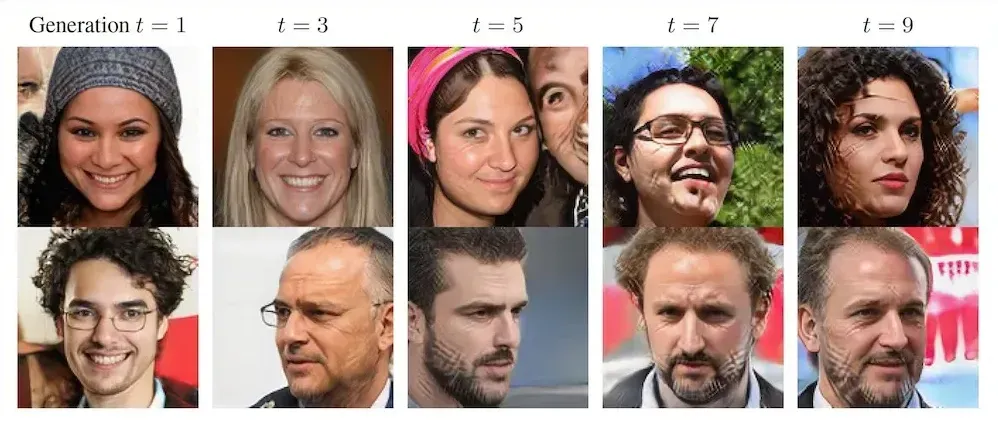

There is hope. The singularity engine powering LLMs has a fundamental flaw. Ironically, the bullshit machines work best when the internet is free of AI-created bullshit. But the near-zero cost of spam creation means that the internet itself could soon be almost exclusively slop.

A study from last summer put the figure at 57%, with the trend moving in one direction. As Protein previously covered with Dead Internet Theory, this is an existential threat to LLMs, which are only as good as the data they are trained on. If that data includes AI-generated content, they develop a form of “mad” cow disease – a disease that originated in cows that were fed the remains of other cows. In just a few iterations, the bullshit machine breaks down.

This is why the data-sets fed into LLMs are so contentious. The economic value of the model lies less in the analysing software and more in the material the software is reading. Yet companies such as OpenAI do not pay for those reading materials, which is why owners of IP libraries, such as The New York Times and music labels, have filed lawsuits against them. If these lawsuits are successful and the chatbots are starved of free material, it may well be that the economy of bullshit collapses; the endless engine of content turned off.

In this case, the resources of Silicon Valley might be turned away from a machine that creates something similar to the truth, and towards the truth itself.

| SEED | #8304 |
|---|---|
| DATE | 25.03.25 |
| PLANTED BY | RUPERT RUSSELL |
