Augmented Acoustics

A new installation turns any hard surface into a musical and visual interface

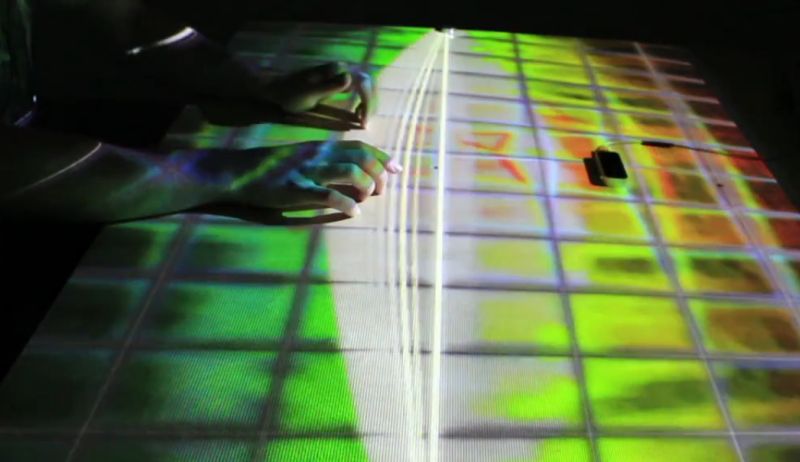

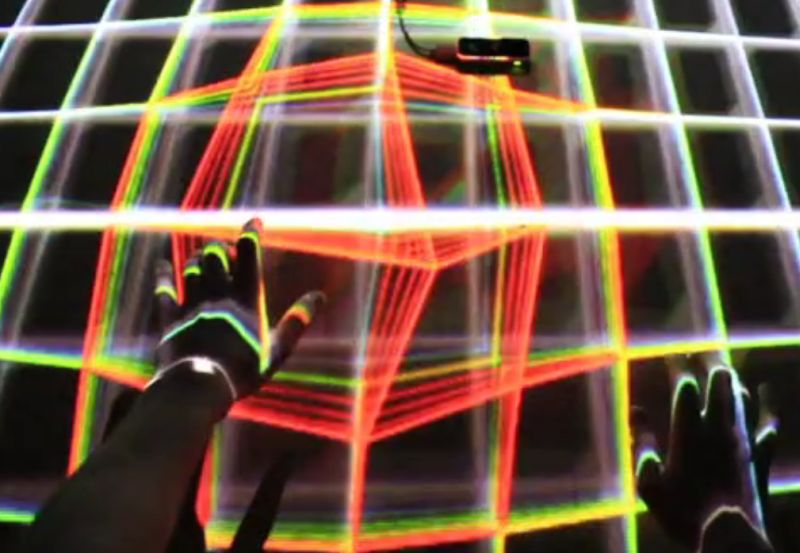

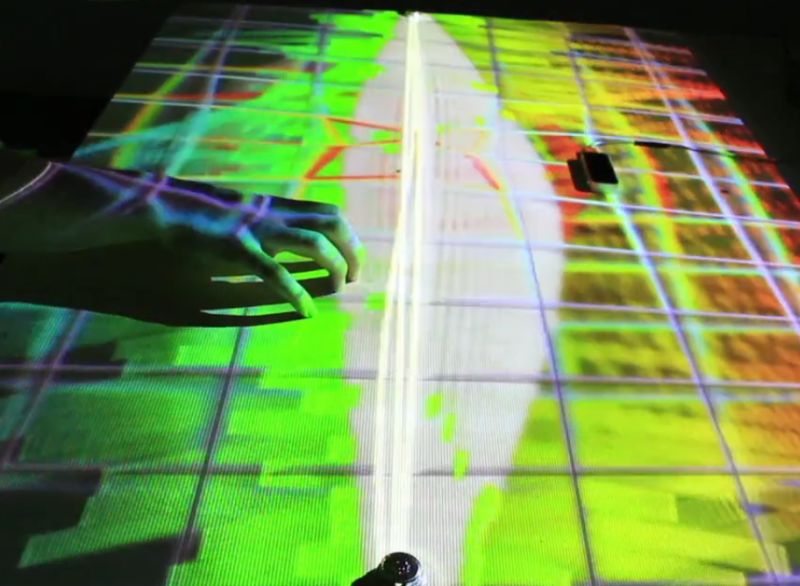

Cambridge University graduate and creative coder Felix Faire presents an acoustic research installation capable of turning any hard surface into an augmented musical and visual interface.

The installation, shown at a February Friday Late at the Royal Academy, used sonar and waveform analysis to recognise where contact is made. Embedded contact microphones capture the acoustic sound of each tap and turn it into a note: striking the surface with the wrist or a fingernail, for example, triggers 808 kick and clap sounds.
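The article doesn't say how the waveform analysis tells a wrist from a fingernail. As a rough illustration only, the Python sketch below swaps in one simple approach. It detects a tap when the contact-mic signal crosses an amplitude threshold, estimates how bright the hit sounds from its zero-crossing rate, and maps a dull, low thump to an 808 kick and a sharp click to an 808 clap. The sample rate, threshold, frame length, 1 kHz split and the names `classify_taps`, `dominant_freq_estimate` and `tap` are all hypothetical and not taken from Faire's system. The sonar-based location sensing is not modelled.

```python
import math

SAMPLE_RATE = 44_100         # assumed sample rate of the contact-mic feed (Hz)
FRAME = 512                  # samples analysed after each detected onset
ONSET_LEVEL = 0.2            # hypothetical amplitude that counts as a tap
BRIGHTNESS_SPLIT_HZ = 1_000  # hypothetical boundary: below = thump, above = click


def dominant_freq_estimate(frame, sample_rate=SAMPLE_RATE):
    """Rough brightness estimate (Hz) from the zero-crossing rate of a frame."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    # A tone crosses zero twice per cycle, so halve the crossing rate.
    return crossings * sample_rate / (2 * len(frame))


def classify_taps(signal):
    """Return (sample_index, sound_name) for every tap found in `signal`."""
    hits = []
    i = 0
    while i < len(signal):
        if abs(signal[i]) >= ONSET_LEVEL:
            frame = signal[i:i + FRAME]
            brightness = dominant_freq_estimate(frame)
            sound = "808 kick" if brightness < BRIGHTNESS_SPLIT_HZ else "808 clap"
            hits.append((i, sound))
            i += FRAME  # skip this tap's ringing so it is not re-triggered
        else:
            i += 1
    return hits


def tap(freq_hz, n=FRAME, decay=0.995):
    """Synthetic stand-in for a recorded tap: an exponentially decaying sine."""
    return [
        math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE) * decay ** t
        for t in range(n)
    ]


if __name__ == "__main__":
    silence = [0.0] * 1000
    recording = silence + tap(80) + silence + tap(4000) + silence
    # Expect one low "thump" (kick) followed by one bright "click" (clap).
    for index, sound in classify_taps(recording):
        print(index, sound)
```

Zero-crossing rate is chosen here only because it is easy to follow; a real system would more likely use richer spectral features and a trained classifier.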

Audio can be recorded and played back with a loop pedal, while Leap Motion gesture control lets performers direct and manipulate sounds with their hands. The projected visuals are generated from the impulses and vibrations created by tapping the table, tying sound and image closely together.

The project was developed as part of research for the Interactive Architecture Lab, where Faire is now studying for a Master's in Adaptive Architecture and Computation.
